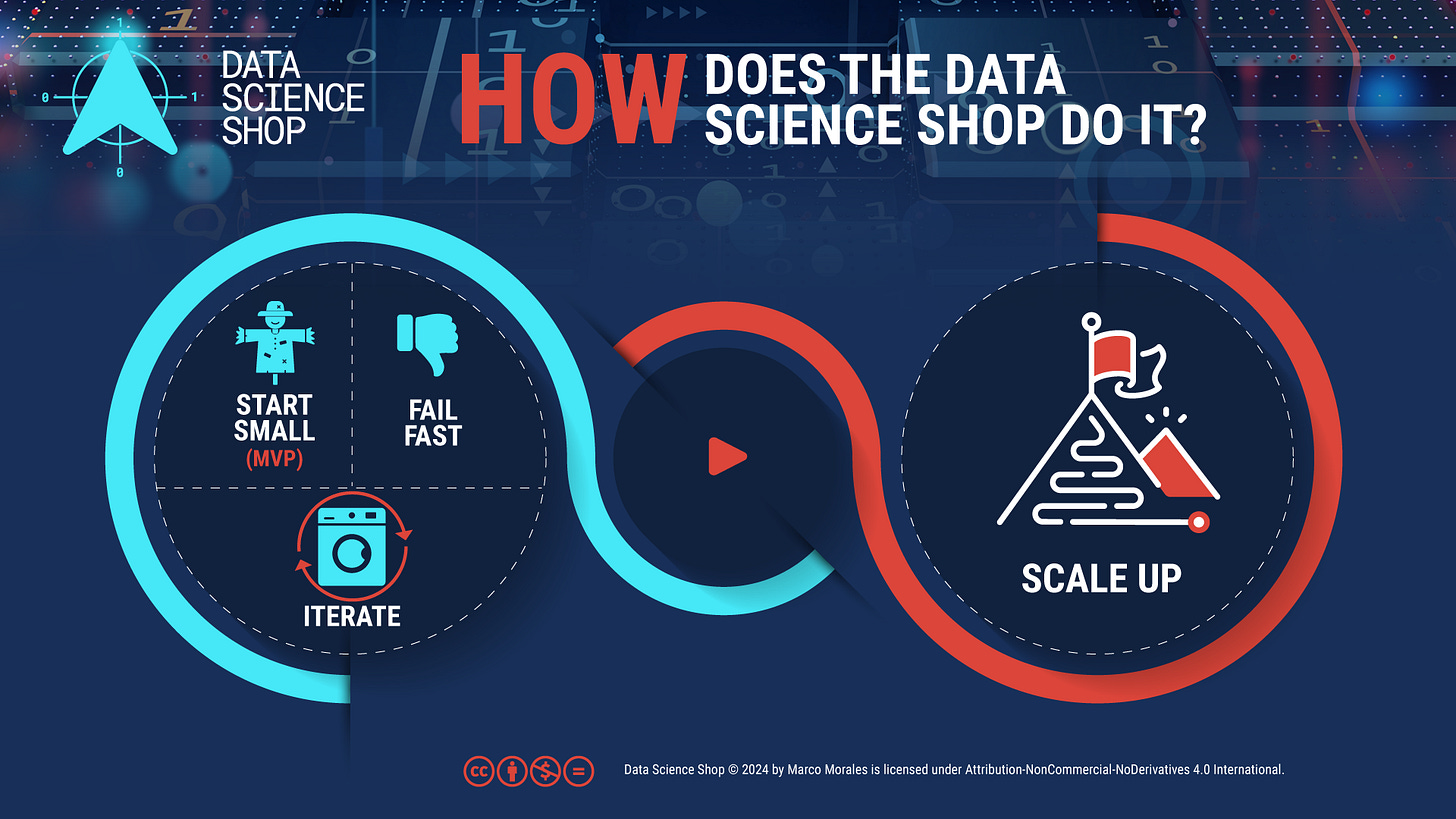

building Data Products: start small, fail fast, scale smart

the method to reduce uncertainty through experimentation

TL;DR

Building Data Products requires structured experimentation because of the inherent uncertainty in both data and algorithms. Starting small with representative data samples lets you validate approaches quickly, without a major investment of resources.

Experimentation here means systematically testing combinations of data quality, algorithmic approaches, and data-algorithm configurations to find the most effective tool for the use case at hand.

The "fail fast" principle in a Data Science Shop focuses on making failure cheap and informative: each failed experiment should reveal something about data-algorithm fit and guide the next iteration.

Scaling up should only occur after achieving consistent performance on small-scale experiments, having clear success metrics, understanding limitations, and assessing full-scale computational requirements.

Successfully building a Data Product does not depend on getting things right the first time, but on having a systematic approach to experimentation and iteration that helps you get there. We've previously explored the process to build Data Products and the importance of feedback loops and foresight in that process; today I want to dive deep into a crucial methodology that mature Data Science Shops employ when building Data Products: the art of starting small, failing fast, and scaling smart.

The uncertainty challenge in Data Products

Building Data Products is fundamentally different from traditional software development. While both involve uncertainty, Data Products face unique challenges that make experimentation not just useful, but essential:

we don't always know if our data contains the right "signals" to solve the problem

we can't be certain which algorithms will perform best with our specific data and use case

the interaction between data and algorithms may produce unexpected results

real-world behavior and patterns captured in data can change over time

This uncertainty means that even with careful planning and the best tools, we can't guarantee immediate success. The solution? A structured approach to experimentation.

What we mean by experimentation

When we talk about experimentation in this blog post, we mean a systematic process of discovery and validation that operates along three key dimensions:

Data Quality and Signal: We experiment with data to understand its fundamental characteristics. Does the data contain the patterns we need? Is the signal-to-noise ratio sufficient? Are there quality issues that need addressing? Each test helps us validate whether our data can support our intended objectives.

Algorithm Selection and Tuning: We experiment with different analytical approaches, from simple statistical methods to complex machine learning algorithms. Which algorithms best capture the patterns in our data? How do different parameters and hyperparameters affect performance? These tests help us identify the most effective tools and settings for our specific use case.

Data-Algorithm Combinations: Perhaps most importantly, we experiment with different ways of combining and configuring both elements. How does feature engineering affect algorithm performance? What data transformations improve our results? The interaction between data and algorithms often holds the key to success.

This type of experimentation is about finding what works in practice: it is empirical, iterative, and focused on building effective solutions.
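To make the three dimensions concrete, here is a minimal Python sketch of a data-algorithm grid. Everything in it is a hypothetical illustration: the two "algorithms" (`fit_mean`, a naive baseline, and `fit_slope`, a least-squares line through the origin), the mean-absolute-error metric, and the toy data with one deliberately corrupted training label. The data transformation under test is simply removing that outlier.

```python
from itertools import product
from statistics import mean

# Hypothetical "algorithms": each takes training pairs (x, y) and returns a predictor.
def fit_mean(train):
    avg = mean(y for _, y in train)
    return lambda x: avg  # ignores x entirely: a naive baseline

def fit_slope(train):
    # Least-squares line through the origin: y ~ b * x
    b = sum(x * y for x, y in train) / sum(x * x for x, _ in train)
    return lambda x: b * x

def mae(predict, test):
    # Mean absolute error on held-out pairs (lower is better)
    return mean(abs(predict(x) - y) for x, y in test)

def run_grid(data_variants, models):
    """Score every (data variant, algorithm) combination, best first."""
    results = []
    for (d_name, (train, test)), (m_name, fit) in product(data_variants.items(), models.items()):
        results.append((d_name, m_name, mae(fit(train), test)))
    return sorted(results, key=lambda r: r[2])

# Toy data, made up for illustration: y = 2x, plus one corrupted training label.
raw_train = [(1, 2), (2, 4), (3, 6), (4, 40)]
test = [(5, 10), (6, 12)]
variants = {
    "raw": (raw_train, test),
    "outlier_removed": ([p for p in raw_train if p != (4, 40)], test),
}
models = {"mean": fit_mean, "slope": fit_slope}

for row in run_grid(variants, models):
    print(row)
```

On this toy data the slope model comes out on top once the outlier is removed, yet with the raw data it ranks last of the four combinations. Judging the algorithm without the data transformation (or the other way round) would have led to the wrong conclusion.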

Why starting small is a strategic advantage

The "start small" principle is more than a cautious approach; it is a strategic advantage when developing Data Products. Here's why:

Rapid Learning Cycles: working with a small, carefully selected data sample allows us to quickly test hypotheses about both data and algorithms

Resource Efficiency: we can run multiple experiments without committing significant computational resources or time

Clear Signal Detection: working with a smaller dataset often makes it easier to identify if an approach is promising or problematic

Faster Iteration: quick experiments mean we can try more combinations of data and algorithms in less time

The key is to select a representative sample of data that captures the essential patterns and challenges we'll face at scale. This sample should be large enough to be meaningful but small enough to enable rapid experimentation.
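One common way to draw such a sample is stratified sampling: take the same fraction from every group of a chosen attribute, so the small sample keeps the group proportions of the full data. The sketch below assumes records are Python dicts and that a hypothetical `segment` field is the attribute that matters; being representative along one attribute does not guarantee being representative along others.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(records, key, fraction, seed=42):
    """Draw `fraction` of the records from every stratum defined by `key`.

    Keeps each group's share of the full data roughly intact, and guarantees
    at least one record per group so rare cases are not lost.
    """
    rng = random.Random(seed)  # fixed seed so experiments are reproducible
    strata = defaultdict(list)
    for record in records:
        strata[key(record)].append(record)
    sample = []
    for items in strata.values():
        k = max(1, round(len(items) * fraction))
        sample.extend(rng.sample(items, k))  # sampling without replacement
    return sample

# Hypothetical customer records: 900 "retail", 100 "enterprise".
records = [{"id": i, "segment": "retail" if i < 900 else "enterprise"} for i in range(1000)]
small = stratified_sample(records, key=lambda r: r["segment"], fraction=0.1)
print(Counter(r["segment"] for r in small))  # Counter({'retail': 90, 'enterprise': 10})
```

The `max(1, ...)` floor means a very rare group is slightly over-represented rather than dropped, which is often the preferable trade-off in early experiments, where missing a segment entirely is the costlier mistake.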

Embracing "fail fast" in Data Science

The "fail fast" principle, borrowed from the lean startup movement, takes on a unique flavor in a Data Science Shop. Instead of failing fast on product-market fit, we fail fast on data-algorithm fit. This means:

quickly identifying when there isn’t enough “signal” in our data

rapidly detecting when an algorithm isn't capturing the patterns we need

swiftly recognizing when our approach isn’t meeting the thresholds we set for our evaluation metrics

The goal isn't to avoid failure; it's to make failure cheap and informative. Each failed experiment should teach us something valuable about our data, our algorithms, or our approach.
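A cheap way to fail fast is to compare each experiment against a naive baseline before investing more in it. The plain-Python sketch below uses a majority-class baseline and accuracy on a validation set; the function names, the labels, and the `min_lift` threshold of 0.05 are hypothetical choices for illustration.

```python
from collections import Counter

def accuracy(predictions, labels):
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def fail_fast_check(model_predictions, train_labels, val_labels, min_lift=0.05):
    """Compare a model with a majority-class baseline and decide whether to continue.

    `min_lift` is a hypothetical threshold: the minimum accuracy gain over the
    baseline that we require before spending more effort on this experiment.
    """
    majority = Counter(train_labels).most_common(1)[0][0]
    baseline_acc = accuracy([majority] * len(val_labels), val_labels)
    model_acc = accuracy(model_predictions, val_labels)
    lift = model_acc - baseline_acc
    return {
        "baseline": baseline_acc,
        "model": model_acc,
        "lift": lift,
        "verdict": "continue" if lift >= min_lift else "stop",
    }

# Hypothetical labels: 80% "no" in training, 8 of 10 "no" in validation.
train_labels = ["no"] * 80 + ["yes"] * 20
val_labels = ["no"] * 8 + ["yes"] * 2

model_a = ["no"] * 10                   # predicts only the majority class
model_b = ["no"] * 8 + ["yes", "no"]    # catches one of the two "yes" cases

print(fail_fast_check(model_a, train_labels, val_labels)["verdict"])  # stop
print(fail_fast_check(model_b, train_labels, val_labels)["verdict"])  # continue
```

Model A only ties the baseline, so there is no evidence it captures anything beyond the class imbalance, and the experiment is stopped. For imbalanced problems like this one, accuracy is a weak metric; the same pattern applies to whichever metric and threshold you have agreed on.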

When and how to scale up

The transition from successful small-scale experiment to production-ready Data Product is a critical phase. Here are the key indicators that you're ready to scale:

Consistent Performance: your solution works reliably on your small data sample

Clear Success Metrics: you have well-defined metrics that demonstrate value

Understanding of Limitations: you know what could go wrong and how to handle it

Scalability Assessment: you've evaluated the computational and data requirements for full-scale implementation
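Two of these indicators lend themselves to a quick check. The sketch below tests consistency by requiring the mean of repeated runs (different seeds or folds) to clear a target while their standard deviation stays small, and approximates the scalability assessment by fitting a power law (runtime ∝ size^k) to the timings of the two largest samples and extrapolating to the full dataset. The scores, timings, thresholds, and function names are all hypothetical.

```python
import math
from statistics import mean, stdev

def consistent_enough(scores, target, max_stdev):
    """Scores from repeated runs must be both high enough AND stable."""
    return mean(scores) >= target and stdev(scores) <= max_stdev

def extrapolate_runtime(sizes, seconds, full_size):
    """Estimate full-scale runtime from timings on the two largest samples.

    Assumes runtime grows roughly as size**k; k is fitted from those two points.
    """
    (n1, t1), (n2, t2) = sorted(zip(sizes, seconds))[-2:]
    k = math.log(t2 / t1) / math.log(n2 / n1)
    return k, t2 * (full_size / n2) ** k

scores = [0.81, 0.83, 0.82, 0.80, 0.82]  # hypothetical results of five reruns
print(consistent_enough(scores, target=0.78, max_stdev=0.02))

# Hypothetical timings (seconds) on samples of 10k, 20k and 40k rows
k, est = extrapolate_runtime([10_000, 20_000, 40_000], [2.0, 4.1, 8.3], full_size=4_000_000)
print(f"growth exponent ~ {k:.2f}, estimated full run ~ {est / 3600:.2f} h")
```

The power-law extrapolation is only a first estimate: memory limits, I/O, or a move to distributed processing can change how runtime grows, so a pilot run at a larger scale is still worth doing.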

Remember: what works well at small scale should work well at large scale, but this needs to be verified, not assumed.
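Verification can be as simple as rerunning the same evaluation on progressively larger samples and flagging the sizes where the metric drops noticeably below the small-sample result. In this sketch the helper name, the scores, and the 0.03 tolerance are hypothetical, and a higher score is assumed to be better.

```python
def degradation_at_scale(score_by_size, tolerance):
    """Return the sample sizes whose score fell more than `tolerance`
    below the score on the smallest sample (higher score = better)."""
    sizes = sorted(score_by_size)
    reference = score_by_size[sizes[0]]
    return [n for n in sizes[1:] if reference - score_by_size[n] > tolerance]

# Hypothetical evaluation scores for the same pipeline on growing samples
scores = {10_000: 0.82, 100_000: 0.81, 1_000_000: 0.74}
print(degradation_at_scale(scores, tolerance=0.03))  # [1000000]
```

A flagged size does not say what went wrong, only where to look: for example, patterns that appear only in the larger data, or behavior that has changed over time.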

Making failure productive…

The path to successful Data Products isn't about avoiding failure; it's about making failure productive. By starting small, failing fast, and scaling smart, we create a systematic way to handle the uncertainty inherent in building solutions in a Data Science Shop. This methodology not only reduces risk but also accelerates our path to valuable, production-ready Data Products.

Remember: the goal isn't to get it right the first time, but to get it right when it matters – when your solution is serving real users and solving real problems.

Did we spark your interest? Then also read:

HOW are effective Data Products built in Data Science? to learn about the process to build successful Data Products: the Data Product Cycle

why your Data Product needs feedback loops? to learn why the Data Product Cycle requires continuous feedback loops where later stages inform and shape earlier decisions.

What is the Data Science Shop? to learn more about the roadmap for the operation of Data Science in a business